Contact

Email : Claire.Dune@irisa.fr

Address : IRISA / INRIA Rennes

Campus Universitaire de Beaulieu

35042 Rennes cedex - France

Tel : +33 2 99 84 74 32

Fax : +33 2 99 84 71 71

Assistant : +33 2 99 84 22 52

(Céline Ammoniaux)

Three years of computer science, electrical engineering and engineering physics, including a one-year specialization in automation, robotics and vision.

I am currently a PhD student with Eric Marchand at IRISA/INRIA in Rennes. I am working with the Lagadic team to develop a vision-based grasping tool to assist people with upper-limb disabilities. I am also working with the L.I.S.T lab of the French Atomic Energy Commission (CEA) at Fontenay-aux-Roses.

You may find further information about the robotic platform and the whole grasping application for disabled people on the web page of my colleague Anthony Remazeilles.

Eric Marchand (IRISA/INRIA Rennes), Christophe Leroux (CEA)

The robotic system is an arm mounted either on a wheelchair or on a mobile platform. It is equipped with two cameras: one is fixed, e.g. on top of the wheelchair (eye-to-hand), and the other is mounted on the end effector of the robotic arm (eye-in-hand). The system is fully calibrated. We make no assumptions about the objects' shape or texture, and use neither an appearance database nor an object model. The only assumptions are that the objects are rigid and static in the reference frame (see figure).
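
Assuming "fully calibrated" means that the rigid transforms between the robot and both cameras are known, those transforms can be chained to express anything seen by the fixed camera in the frame of the mobile camera. The following is a minimal sketch of that chaining, not the system's actual code: the frame names (b for the robot base, f for the fixed camera, e for the end effector, m for the mobile camera), the nested-list matrix representation and every helper name are hypothetical.

```python
# Frames (hypothetical names): b = robot base, f = fixed eye-to-hand camera,
# e = end effector, m = mobile eye-in-hand camera.
# x_T_y is a 4x4 homogeneous matrix mapping coordinates in frame y to frame x.


def translation(x, y, z):
    """Pure translation, handy for building test poses."""
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


def matmul(A, B):
    """Product of two 4x4 matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def rigid_inverse(T):
    """Invert [R t; 0 1] as [R^T  -R^T t; 0 1] (valid for rigid transforms only)."""
    Rt = [[T[j][i] for j in range(3)] for i in range(3)]  # R transposed
    t = [T[i][3] for i in range(3)]
    ti = [-sum(Rt[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [Rt[i] + [ti[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def transform_point(T, p):
    """Apply a 4x4 homogeneous transform to a 3D point."""
    ph = list(p) + [1.0]
    return [sum(T[i][k] * ph[k] for k in range(4)) for i in range(3)]


def fixed_to_mobile(b_T_f, b_T_e, e_T_m):
    """m_T_f = (b_T_e e_T_m)^-1 b_T_f.

    b_T_f comes from eye-to-hand calibration, b_T_e from the arm's forward
    kinematics and e_T_m from hand-eye calibration."""
    b_T_m = matmul(b_T_e, e_T_m)
    return matmul(rigid_inverse(b_T_m), b_T_f)
```

Only b_T_e changes as the arm moves; the two calibration transforms stay constant, which is what lets a point estimated in the fixed camera frame be re-expressed in the mobile camera frame at any arm configuration.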


The first step towards an autonomous grasping tool is to position the cameras so that the object lies within the field of view of both cameras. We developed a method based on the epipolar geometry of the two-camera system. The object is known to lie on the 3D line of sight defined by the optical centre of the eye-to-hand camera and the point "clicked" in its image. The mobile camera, controlled by virtual visual servoing, then scans this line in search of the object. The most likely position is identified using classical image matching techniques. At the end of the process, the object lies within the field of view of both cameras. The method has been tested and validated on a robotic cell with a wide range of everyday objects.
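
The geometry behind the scan can be illustrated with a small sketch. It assumes an undistorted pinhole model for the eye-to-hand camera with hypothetical intrinsics fx, fy, cx, cy and a known pose b_T_f of that camera in the robot base frame; it back-projects the clicked pixel to the line of sight and samples candidate object positions along it. It does not reproduce the virtual visual servoing or the image matching of the actual method, and the function names and distance bounds are illustrative assumptions.

```python
import math


def line_of_sight(u, v, fx, fy, cx, cy, b_T_f):
    """Back-project pixel (u, v) of the fixed camera to a 3D line in the base frame.

    Assumes an undistorted pinhole model; b_T_f is the 4x4 pose of the fixed
    camera in the base frame. Returns (origin, unit direction)."""
    d_f = [(u - cx) / fx, (v - cy) / fy, 1.0]  # ray direction in the camera frame
    origin = [b_T_f[i][3] for i in range(3)]   # optical centre in the base frame
    d_b = [sum(b_T_f[i][k] * d_f[k] for k in range(3)) for i in range(3)]
    norm = math.sqrt(sum(x * x for x in d_b))
    return origin, [x / norm for x in d_b]


def candidate_points(origin, direction, dist_min, dist_max, steps):
    """Evenly sample the line of sight between two distances from the optical
    centre (steps >= 2). Each sample is a hypothetical object position that
    the mobile camera could be steered to look at."""
    pts = []
    for k in range(steps):
        dist = dist_min + k * (dist_max - dist_min) / (steps - 1)
        pts.append([origin[i] + dist * direction[i] for i in range(3)])
    return pts
```

Because the direction is normalized, the sampling bounds are distances from the optical centre along the ray rather than depths along the camera's optical axis.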


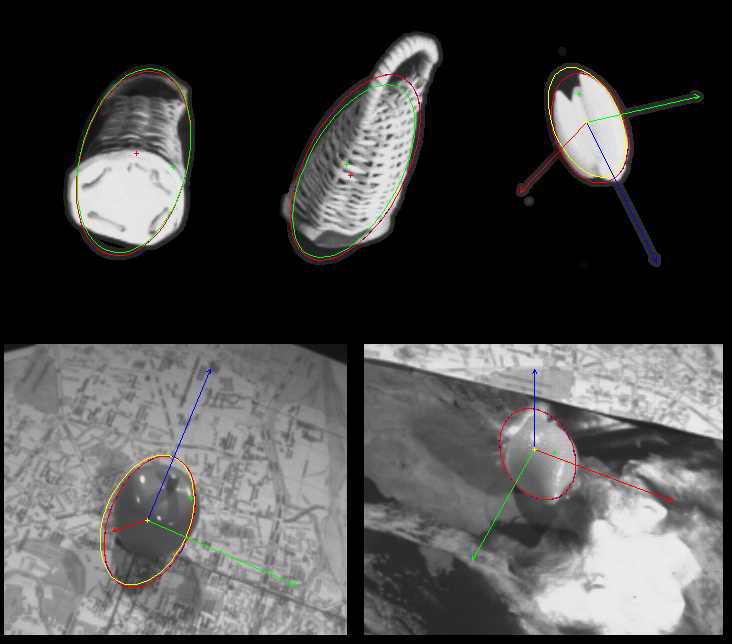

Once both cameras "see" the object, the remaining question is: how can we gather enough information to reliably grasp an unmodelled, unknown object using visual input alone? We assume that an object can be grasped by aligning the gripper with the object's smallest dimension, perpendicular to its main axis and centred on that axis. Since grasping in this way does not require an accurate 3D reconstruction of the object, we propose computing the best-fit quadric of the object from the contours extracted in several views taken by the mobile camera. The quadric representation is compact and provides the information needed for the grasp. We also propose a method to automatically compute the velocity of the mobile camera during the "reconstruction" step, based on an information criterion. At the end of the process, the object pose is known well enough to grasp it properly.
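
The grasp rule above becomes concrete if the fitted quadric is an ellipsoid. The following is a minimal sketch under that assumption, writing the ellipsoid as (x - c)^T A (x - c) = 1 for a symmetric positive-definite 3x3 matrix A; it does not fit the quadric from contours or implement the information criterion. The eigenvectors of A are the principal axes and 1/sqrt(lambda) gives each semi-axis length, so the main axis corresponds to the smallest eigenvalue and the smallest dimension to the largest one. Since the eigenvectors of a symmetric matrix are orthogonal, the closing direction is automatically perpendicular to the main axis. The function names and returned fields are hypothetical, and the eigen-decomposition uses the classical cyclic Jacobi method so that only the standard library is needed.

```python
import math


def jacobi_eigen(a, max_sweeps=50, tol=1e-12):
    """Eigen-decomposition of a symmetric 3x3 matrix by cyclic Jacobi rotations.

    Returns (eigenvalues, v) where column i of v is the unit eigenvector
    for eigenvalues[i]."""
    n = 3
    a = [row[:] for row in a]  # work on a copy
    v = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(a[p][q]) < 1e-15:
                    continue
                # Rotation chosen so that the (p, q) entry becomes zero.
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):  # A <- A J
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # A <- J^T A
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
                for k in range(n):  # accumulate eigenvectors: V <- V J
                    vkp, vkq = v[k][p], v[k][q]
                    v[k][p], v[k][q] = c * vkp - s * vkq, s * vkp + c * vkq
    return [a[i][i] for i in range(n)], v


def column(m, i):
    """Column i of a 3x3 matrix."""
    return [m[k][i] for k in range(3)]


def grasp_from_ellipsoid(center, A):
    """Hypothetical grasp rule: centre the gripper on the ellipsoid and close
    it along the shortest principal axis, perpendicular to the main axis."""
    vals, vecs = jacobi_eigen(A)
    if min(vals) <= 0.0:
        raise ValueError("A must be positive definite (quadric is not an ellipsoid)")
    # Ascending eigenvalues = descending semi-axis lengths 1/sqrt(lambda).
    order = sorted(range(3), key=lambda i: vals[i])
    return {
        "center": list(center),
        "main_axis": column(vecs, order[0]),          # longest semi-axis
        "closing_direction": column(vecs, order[2]),  # shortest semi-axis
        "width": 2.0 / math.sqrt(vals[order[2]]),     # smallest object dimension
        "length": 2.0 / math.sqrt(vals[order[0]]),    # extent along the main axis
    }
```

The returned width is the minimum gripper opening the object requires along the closing direction.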


Complete list (with postscript or pdf files if available)