Contact

This page is no longer supported.

Olivier Kermorgant is now an assistant professor at Université de Strasbourg (link)


I graduated from École Centrale Paris in 2004, then joined the Arcelor Research centre in Metz, where I worked on process innovation and optimisation in galvanization and hot rolling, and on general research in experimental design.

Since October 2008, I have been a PhD student under the supervision of François Chaumette. My thesis is financed by the ANR project Scuav, and its aim is to develop a sensor fusion framework. The approach fuses the data directly at the control law level, instead of using it to estimate the robot state. Online calibration was studied first; the fusion framework was then applied to several visual servoing configurations, including multi-camera cooperation, joint limits avoidance and hybrid 2D/3D visual servoing.

More information in my resume.

The principle of this new framework is to use the kinematic model of the sensor, which links the sensor signal variations to the robot end-effector velocity. During the robot task, these quantities are linked through the interaction matrix (which depends on the sensor intrinsic parameters) and a twist transformation matrix (which depends on the sensor extrinsic parameters). The Jacobian of the kinematic model can be expressed, and the sensor can be calibrated by using the features of the performed task.
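As an illustration of the kinematic model described above, here is a minimal NumPy sketch. It assumes a single normalized image point as the sensor feature and uses the textbook point interaction matrix and twist transformation matrix; the function names (`skew`, `twist_matrix`, `point_interaction_matrix`, `feature_velocity`) are hypothetical and are not taken from the thesis code.

```python
import numpy as np

def skew(t):
    """Cross-product matrix [t]_x, such that skew(t) @ a == np.cross(t, a)."""
    tx, ty, tz = t
    return np.array([[0.0, -tz,  ty],
                     [ tz, 0.0, -tx],
                     [-ty,  tx, 0.0]])

def twist_matrix(R, t):
    """6x6 twist transformation built from the extrinsic parameters (R, t).

    Maps an end-effector velocity (v, omega) expressed in the end-effector
    frame to the same velocity expressed in the sensor frame.
    """
    W = np.zeros((6, 6))
    W[:3, :3] = R
    W[:3, 3:] = skew(t) @ R
    W[3:, 3:] = R
    return W

def point_interaction_matrix(x, y, Z):
    """Classical 2x6 interaction matrix of a normalized image point (x, y)
    at depth Z (assumed feature type, chosen only for illustration)."""
    return np.array([
        [-1.0 / Z, 0.0, x / Z, x * y, -(1.0 + x * x), y],
        [0.0, -1.0 / Z, y / Z, 1.0 + y * y, -x * y, -x],
    ])

def feature_velocity(L, W, v_e):
    """Sensor signal variation: s_dot = L @ W @ v_e."""
    return L @ W @ v_e

# Sensor frame aligned with the end effector, pure translation along x.
L = point_interaction_matrix(0.0, 0.0, 1.0)
W = twist_matrix(np.eye(3), np.zeros(3))
s_dot = feature_velocity(L, W, np.array([1.0, 0.0, 0.0, 0.0, 0.0, 0.0]))
```

In this sketch the intrinsic parameters would enter through the conversion from pixels to the normalized coordinates `(x, y)`, and the extrinsic parameters through `R` and `t`.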

Low-level fusion stands for combining features coming from different sensors without estimating the robot state. The fusion is performed directly in the sensor space, and the robot velocity is computed in order to minimize the global error, the components of which are the features from the different sensors. This redundancy-based approach leads to several applications.
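The fusion principle above can be sketched as a stacked least-squares control law. This is only an assumed form (the classical pseudo-inverse law applied to the concatenated error), not necessarily the exact law used in the thesis; `fused_velocity` and its arguments are hypothetical names.

```python
import numpy as np

def fused_velocity(jacobians, errors, gain=1.0):
    """Velocity that decreases the global error of several sensors.

    jacobians: list of (k_i x 6) matrices, one per sensor, each mapping the
               robot velocity to the variation of that sensor's features.
    errors:    list of k_i-vectors (current minus desired features).
    No robot state is estimated: features are simply stacked in sensor space.
    """
    J = np.vstack(jacobians)      # global Jacobian
    e = np.concatenate(errors)    # global error
    return -gain * np.linalg.pinv(J) @ e

# Two hypothetical sensors, each constraining three velocity components.
J1, J2 = np.eye(6)[:3], np.eye(6)[3:]
e1, e2 = np.array([1.0, 2.0, 3.0]), np.array([4.0, 5.0, 6.0])
v = fused_velocity([J1, J2], [e1, e2], gain=0.5)
```

Stacking more features than degrees of freedom makes the system redundant, and the pseudo-inverse then returns the velocity that minimizes the global error in the least-squares sense.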


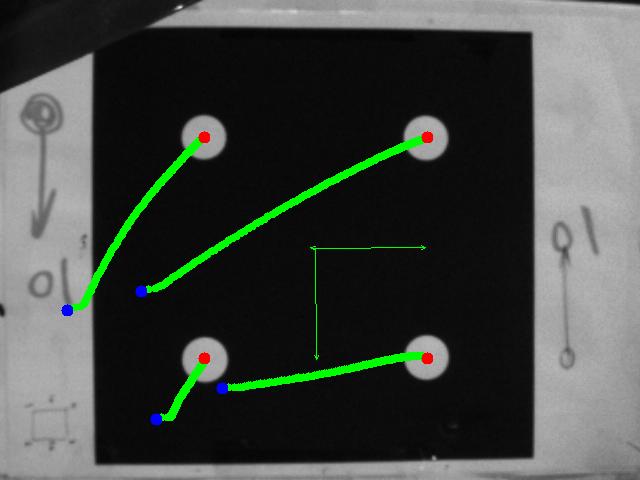

A classical multi-camera configuration is the eye-in-hand / eye-to-hand cooperation. While the eye-in-hand camera is observing a fixed landmark, the eye-to-hand camera is observing a second landmark that is carried by the robot end effector. A global task can then be defined, using features from both cameras.


The joint positions of a robot arm can be seen as a set of features. The fusion formalism can then be applied, and used in the case of joint limits avoidance. The classical avoidance schemes rely on redundancy, while the low-level fusion allows avoiding joint limits for a main task of full rank. Validations are carried out on the Viper 6-DOF robot.
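One possible way to treat joint positions as features is sketched below. Everything here is an assumption made for illustration: the control is written in joint space, the joint features are pulled towards the middle of their range, and the activation weights (zero in a safe zone, growing linearly to one at the limit) are a hypothetical choice rather than the weighting actually used in the validations.

```python
import numpy as np

def joint_weights(q, q_min, q_max, margin=0.1):
    """Hypothetical activation weights: 0 in the safe zone, 1 at a limit."""
    band = margin * (q_max - q_min)
    below = np.clip((q_min + band - q) / band, 0.0, None)
    above = np.clip((q - (q_max - band)) / band, 0.0, None)
    return below + above

def fused_joint_velocity(J_task, e_task, q, q_min, q_max, gain=1.0):
    """Weighted least-squares joint velocity for main task plus joint features.

    The joint features have an identity Jacobian and the error q - q_mid.
    Their weights vanish far from the limits, so a full-rank main task is
    left untouched there and only altered when a joint nears a limit.
    """
    n = q.size
    q_mid = 0.5 * (q_min + q_max)
    J = np.vstack([J_task, np.eye(n)])
    e = np.concatenate([e_task, q - q_mid])
    w = np.concatenate([np.ones(e_task.size), joint_weights(q, q_min, q_max)])
    sqrt_w = np.sqrt(w)
    return -gain * np.linalg.pinv(sqrt_w[:, None] * J) @ (sqrt_w * e)

q_min, q_max = np.array([-1.0, -1.0]), np.array([1.0, 1.0])
# Far from the limits: the main task alone drives the joints.
qd_safe = fused_joint_velocity(np.eye(2), np.array([1.0, -1.0]),
                               np.zeros(2), q_min, q_max)
# Joint 0 at its upper limit while the main task pushes it further.
qd_limit = fused_joint_velocity(np.eye(2), np.array([-1.0, 0.0]),
                                np.array([1.0, 0.0]), q_min, q_max)
```

In the second call the weighted joint feature balances the main task on joint 0, so that joint stops moving towards its limit even though the main task is of full rank.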


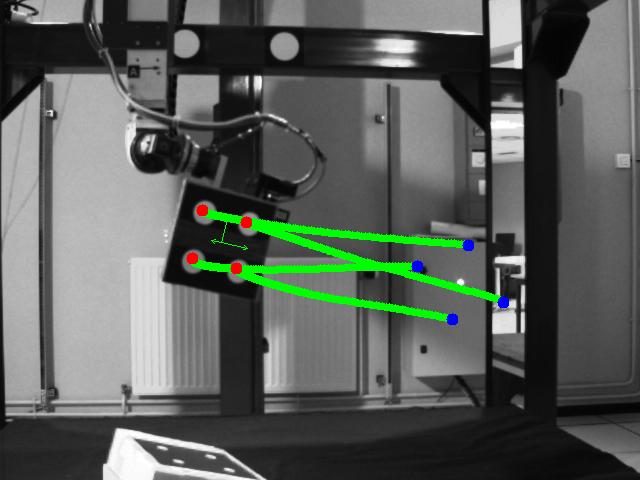

Following the same philosophy as joint limits avoidance, we address the visibility constraint in position-based visual servoing (PBVS). Adding 2D information to the task leads to a control law that stays very close to PBVS, which yields a satisfactory 3D trajectory while keeping the object in the image field of view. Validation is done on the Afma6 gantry robot.

Complete list (with postscript or pdf files if available)