Contact: Romeo Tatsambon Fomena

Creation Date: November 2008

Visual servoing is used to move a mobile robot (see figure below) to a position where the desired image of the target can be observed. We use a paracatadioptric camera, which combines a parabolic mirror and an orthographic camera. The system (robot and camera) is only coarsely calibrated, in order to validate the robustness of the visual servoing. The target is a white point. Using such a simple object makes it easy to compute the selected visual features from the image of the target at video rate, without any image processing problem. The desired features were computed after moving the robot to the position corresponding to the desired image. The depth of the target point is roughly estimated using an approximate value of the desired depth.

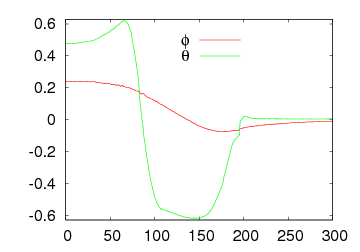

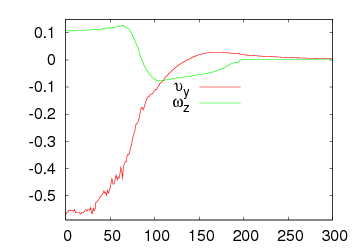

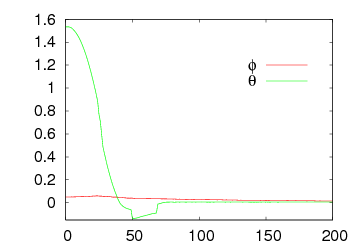

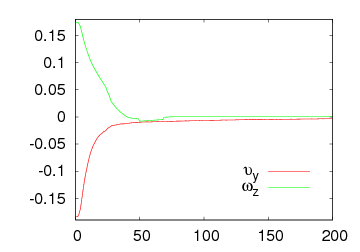

In this case, the gain is set to 0.1. The following figures describe the system behaviour: the figure on the left shows the evolution of the visual feature errors, while the figure on the right plots the camera velocities. The large variation of the features related to the rotation is due to the coarse approximation of the system parameters, which leads to insufficient compensation of the rotational velocity. Nevertheless, the system converges, showing the robustness of the decoupled control.

The video for this case is available here

Here we consider the case where the gain is set to 0.05. The following figures describe the system behaviour: the figure on the left shows the evolution of the visual feature errors, while the figure on the right plots the camera velocities. The variation of the feature related to the rotation shows that the compensation of the rotational velocity is satisfactory.
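To give a feel for what the gain does, here is a minimal sketch under an idealized assumption that does not hold exactly on the real robot: the interaction between features and velocities is perfectly compensated, so that the feature error e obeys de/dt = -gain * e. The function name `simulate_error_decay`, the sampling period `dt` and the number of steps are all hypothetical choices made for illustration; they are not taken from the experiments.

```python
def simulate_error_decay(e0, gain, dt, steps):
    """Euler integration of de/dt = -gain * e (idealized, assumed model).

    e0    : initial feature error (scalar)
    gain  : control gain (0.1 and 0.05 are the values quoted in the text)
    dt    : sampling period in seconds (hypothetical value)
    steps : number of control iterations
    Returns the list of errors, including the initial one.
    """
    errors = [e0]
    for _ in range(steps):
        e = errors[-1]
        v = -gain * e              # velocity command in feature space
        errors.append(e + dt * v)  # one Euler step
    return errors


if __name__ == "__main__":
    # Compare the two gains over the same (assumed) duration.
    for gain in (0.1, 0.05):
        final = simulate_error_decay(1.0, gain, dt=0.04, steps=500)[-1]
        print(f"gain={gain}: remaining error fraction = {final:.4f}")
```

In this idealized model the lower gain only slows the decay. It also produces smaller velocity commands, which is one plausible reading of why the rotational compensation looks better at 0.05 on the coarsely calibrated system.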

This work is concerned with modeling issues in visual servoing. The challenge is to find optimal visual features for visual servoing. The optimality criteria are: local and, as far as possible, global stability of the system; robustness to calibration and modeling errors; absence of singularities and local minima; satisfactory trajectories of the system and of the measures in the image; and finally a linear link and maximal decoupling between the visual features and the degrees of freedom taken into account.

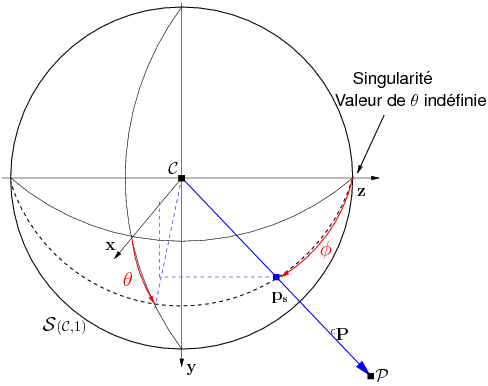

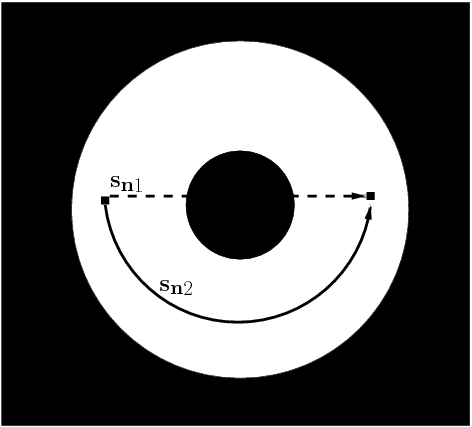

A spherical projection model is proposed to search for optimal visual features. Compared to the perspective or omnidirectional projection models, determining optimal features with this projection model is quite easy and intuitive. The spherical coordinates of the image of the point (see the left figure below) are proposed as a minimal and decoupled set of features. Using this set, a classical control law has been proved to be asymptotically stable even in the presence of depth error. In practice, as shown by the experimental results above, a rough estimate of the depth is sufficient, as is a coarse system calibration. The new set is suitable for catadioptric cameras with a dead angle in the center, since the corresponding control is able to avoid the dead angle in the case of a 180° rotation (see the right figure below).
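As an illustration of the feature set described above, the following sketch computes the two spherical coordinates of the image of a point on the unit sphere. Several things here are assumptions rather than details taken from this page: the angle convention (polar angle `theta` measured from the camera z-axis, azimuth `phi` in the x-y plane), the use of the unified projection model with mirror parameter 1 to lift a normalized paracatadioptric image point onto the sphere, and all function names.

```python
import math


def lift_to_sphere(x, y):
    """Lift a normalized paracatadioptric image point to the unit sphere.

    Assumes the unified projection model with mirror parameter 1,
    i.e. the inverse of a stereographic projection.
    """
    rho2 = x * x + y * y
    return (2.0 * x / (1.0 + rho2),
            2.0 * y / (1.0 + rho2),
            (1.0 - rho2) / (1.0 + rho2))


def spherical_features(X, Y, Z):
    """Return (theta, phi) for a 3D point or a point on the sphere.

    theta: polar angle from the z-axis, in [0, pi] (assumed convention)
    phi  : azimuth in the x-y plane, in (-pi, pi]
    """
    r = math.sqrt(X * X + Y * Y + Z * Z)
    if r == 0.0:
        raise ValueError("the point must not be at the projection centre")
    theta = math.acos(max(-1.0, min(1.0, Z / r)))  # clamp against rounding
    phi = math.atan2(Y, X)
    return theta, phi


def feature_error(s, s_star):
    """Error between current and desired features, with phi wrapped."""
    d_theta = s[0] - s_star[0]
    d_phi = s[1] - s_star[1]
    # Wrap the azimuth difference into (-pi, pi] so that a rotation
    # close to 180 degrees is not seen as a much larger one.
    d_phi = math.atan2(math.sin(d_phi), math.cos(d_phi))
    return d_theta, d_phi


if __name__ == "__main__":
    s = spherical_features(*lift_to_sphere(0.3, -0.2))
    s_star = spherical_features(*lift_to_sphere(-0.3, 0.2))
    print(feature_error(s, s_star))
```

Only the direction of the point enters these two features. The depth appears in the control law alone, which fits the statement above that a rough depth estimate is sufficient.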

Irisa - Inria - Copyright 2009 © Lagadic Project