Contact: Romeo Tatsambon Fomena

Creation Date: September 2007

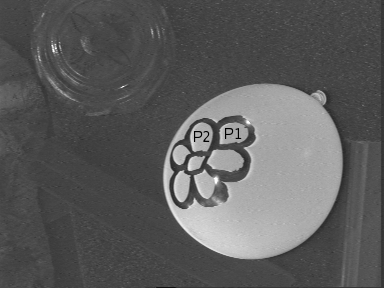

Image-based visual servoing is used to move an eye-in-hand system to a position where the desired image of the target is observed. The camera is mounted on the end-effector of a six-degree-of-freedom robot. The target is a white spherical ball of radius 9.5 cm, marked with a vector tangent to a point on its surface. Because the object is so simple, the selected visual features can be computed on the observed disk (the image of the target) at video rate, without any image processing problem. The desired features are computed after moving the robot to a position corresponding to the desired image. The following figures show the desired (left) and initial (right) images used for the experiment. First, we compare the set of features previously proposed for this target in [1] with the new set of features.
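The disk features can be obtained with very little processing once the contour of the ball's image is known. The sketch below assumes a calibrated pinhole camera (normalized coordinates x = X/Z, y = Y/Z) and a set of points sampled on the disk contour. Each contour point is lifted onto the unit sphere. There, the ball's occluding contour is a small circle {p : u·p = cos θ}, where u is the direction of the ball centre O and sin θ = R/‖O‖. A least-squares fit of the plane p·w = 1 gives u and θ, and the scaled centre O/R = u/sin θ then follows without knowing R. The function names and the choice of O/R as a feature are illustrative assumptions, not necessarily the exact features used in the experiment.

```python
import numpy as np


def lift_pinhole(xy):
    """Lift normalized pinhole image points (x, y) onto the unit sphere.

    Assumes a calibrated perspective camera, so (x, y, 1) is the viewing ray.
    """
    xy = np.asarray(xy, dtype=float)
    rays = np.column_stack([xy, np.ones(len(xy))])
    return rays / np.linalg.norm(rays, axis=1, keepdims=True)


def ball_features_from_contour(xy):
    """Estimate (u, cos_theta, s = O/R) from contour points of the ball image.

    On the unit sphere the occluding contour is the small circle
    {p : u . p = cos(theta)}, i.e. p . w = 1 with w = u / cos(theta).
    w is found by linear least squares over all contour points.
    """
    p = lift_pinhole(xy)
    w, *_ = np.linalg.lstsq(p, np.ones(len(p)), rcond=None)
    cos_theta = 1.0 / np.linalg.norm(w)
    u = w * cos_theta                        # unit direction of the ball centre
    sin_theta = np.sqrt(1.0 - cos_theta**2)  # equals R / ||O||
    s = u / sin_theta                        # O / R: no radius needed
    return u, cos_theta, s


# Synthetic check with a hypothetical ball (radius and position in metres).
R_true = 0.095
O_true = np.array([0.10, -0.05, 0.80])
u_true = O_true / np.linalg.norm(O_true)
sin_true = R_true / np.linalg.norm(O_true)
cos_true = np.sqrt(1.0 - sin_true**2)

# Orthonormal basis (e1, e2) of the plane perpendicular to u_true.
e1 = np.cross(u_true, [1.0, 0.0, 0.0])
e1 /= np.linalg.norm(e1)
e2 = np.cross(u_true, e1)

# Contour directions on the unit sphere, then their perspective image.
phi = np.linspace(0.0, 2.0 * np.pi, 36, endpoint=False)
contour = cos_true * u_true + sin_true * (
    np.outer(np.cos(phi), e1) + np.outer(np.sin(phi), e2))
contour_xy = contour[:, :2] / contour[:, 2:3]

u_est, cos_est, s_est = ball_features_from_contour(contour_xy)
print(s_est, O_true / R_true)
```

With real images the contour points are noisy. The least-squares fit averages over all of them, which keeps the computation cheap enough for video rate.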


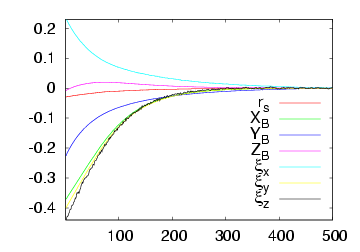

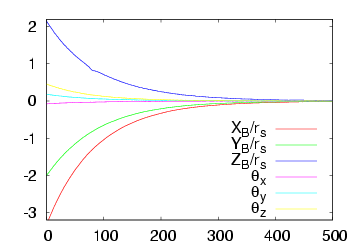

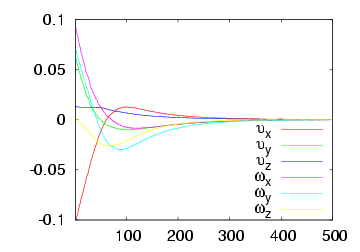

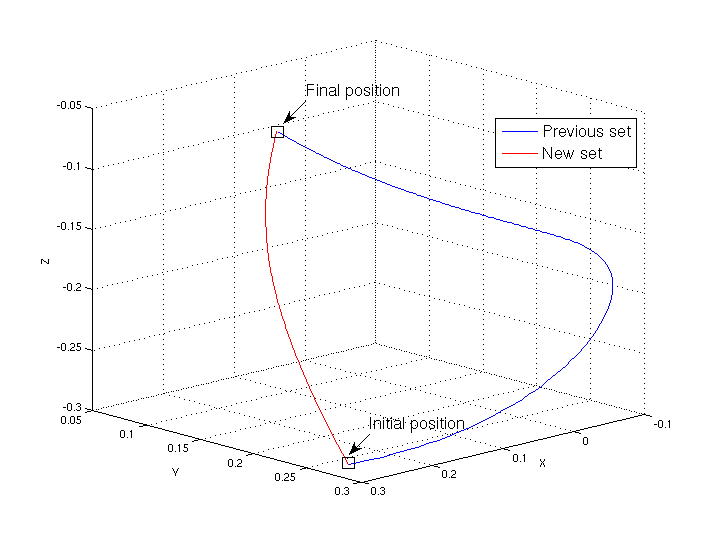

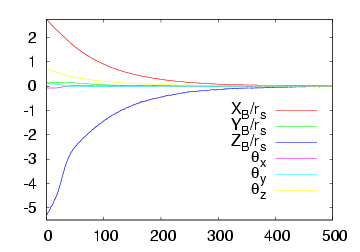

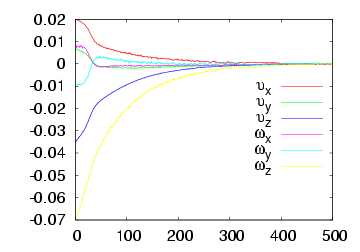

Here we use the exact value of the ball radius and correct camera calibration parameters. The figures on the left show the system behaviour with the previous set of features [1], and the figures on the right show it with the new set. The top row shows the feature error trajectories, the second row the camera velocities, and the third row the robot trajectory in Cartesian space. With the new set, this trajectory is shorter than with the previous set. The first part of this video illustrates this comparison.
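Both feature sets are used with the classical image-based control law v = −λ L̂⁺ (s − s*). Here L̂ is the model of the interaction matrix used by the controller, and v is the 6-D camera velocity (translation and rotation). The following is a minimal simulation sketch. It assumes, for illustration only, a three-feature vector s = O/R built from the ball centre O in the camera frame. Its interaction matrix L = [−I₃/R, [s]ₓ] follows from the camera-frame kinematics dO/dt = −v + O × ω. The helper names and the initial and desired positions are hypothetical.

```python
import numpy as np


def skew(a):
    """Cross-product matrix [a]_x, so that skew(a) @ b == np.cross(a, b)."""
    return np.array([[0.0, -a[2], a[1]],
                     [a[2], 0.0, -a[0]],
                     [-a[1], a[0], 0.0]])


def interaction_matrix(s, radius):
    """3x6 interaction matrix of s = O / R for a static ball centre O.

    Follows from the camera-frame kinematics dO/dt = -v + [O]_x omega.
    """
    return np.hstack([-np.eye(3) / radius, skew(s)])


def ibvs_step(O, s_star, radius, radius_est, gain, dt):
    """Apply v = -gain * pinv(L_hat) (s - s*) for dt and return the new O."""
    s = O / radius                                 # feature measured in the image
    L_hat = interaction_matrix(s, radius_est)      # model used by the controller
    twist = -gain * np.linalg.pinv(L_hat) @ (s - s_star)
    v, omega = twist[:3], twist[3:]
    return O + dt * (-v + np.cross(O, omega))      # Euler step of the kinematics


R = 0.095                                 # ball radius from the experiment (m)
O_goal = np.array([0.0, 0.0, 0.50])       # hypothetical desired ball centre
s_star = O_goal / R
O = np.array([0.15, -0.10, 0.90])         # hypothetical initial ball centre
errors = []
for _ in range(200):
    errors.append(np.linalg.norm(O / R - s_star))
    O = ibvs_step(O, s_star, R, R, gain=0.5, dt=0.05)  # exact radius, as in the text
```

With the exact radius, L̂ = L. Because L has full row rank, L L̂⁺ = I, so the error shrinks by the constant factor 1 − λΔt (0.975 here) at each step. These three features leave three degrees of freedom unconstrained. A ball alone is invariant to rotations about its centre, and the tangent vector marked on it is what makes the remaining ones observable.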


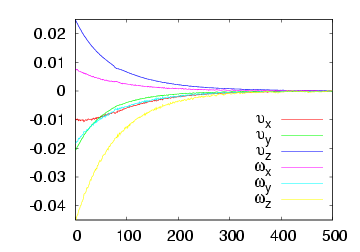

In practice, the exact radius of the spherical ball is unknown. Visual servoing nevertheless still converges, and this is formally proved for a classical control law using the new set of features. We also validate this result experimentally with a non-spherical decorative balloon marked with a black flower pattern. For this target, the radius is set to 6.5 cm. The desired (left) and initial (right) images are shown in the first row, and the second row plots the system behaviour. The second part of this video illustrates this application.
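The reason a rough radius is enough can be illustrated on a simplified model. Assume, for illustration only, a three-feature vector $s = O/R$ built from the ball centre $O$. Its interaction matrix is $L = \begin{bmatrix} -\frac{1}{R} I_3 & [s]_\times \end{bmatrix}$, and the controller uses an estimate $\hat R$ in place of $R$, with $v_c$ denoting the 6-D camera velocity. This is not the formal proof mentioned above, which concerns the full six-feature set. It is only a short Lyapunov argument for this reduced case:

$$
e = s - s^*,\qquad
\hat L = \begin{bmatrix} -\frac{1}{\hat R} I_3 & [s]_\times \end{bmatrix},\qquad
v_c = -\lambda\,\hat L^{+} e = -\lambda\,\hat L^{\top}\big(\hat L \hat L^{\top}\big)^{-1} e
$$

$$
\dot e = L v_c = -\lambda M e,\qquad
M = \Big(\tfrac{1}{R\hat R} I_3 + [s]_\times [s]_\times^{\top}\Big)\Big(\tfrac{1}{\hat R^{2}} I_3 + [s]_\times [s]_\times^{\top}\Big)^{-1}
$$

Both factors are functions of $[s]_\times [s]_\times^{\top} = \|s\|^2 I_3 - s s^{\top}$, whose eigenvalues are $\sigma \in \{0, \|s\|^2, \|s\|^2\}$. They therefore commute, and $M$ is symmetric with eigenvalues

$$
\mu(\sigma) = \frac{1/(R\hat R) + \sigma}{1/\hat R^{2} + \sigma} \in \big[\min(1, \hat R/R),\ \max(1, \hat R/R)\big].
$$

With $V = \tfrac12 \|e\|^2$,

$$
\dot V = -\lambda\, e^{\top} M e \le -2\lambda \min\!\big(1, \hat R/R\big)\, V,
$$

so for $\lambda > 0$, $e$ converges exponentially to zero for any $\hat R > 0$. In this reduced model, a wrong radius estimate only changes the convergence rate.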


This work is concerned with modeling issues in visual servoing. The challenge is to find optimal visual features for visual servoing. By optimality, we mean satisfying the following criteria: local and, as far as possible, global stability of the system; robustness to calibration and modeling errors; non-singularity; avoidance of local minima; satisfactory motion of the system and of the features in the image; and finally, maximal decoupling and a linear link (the ultimate goal) between the visual features and the degrees of freedom taken into account.

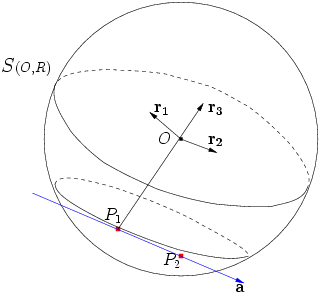

A spherical projection model is proposed to search for optimal visual features. The target is a sphere marked with a vector tangent to a point on its surface (see the figure above). We propose a new minimal set of six visual features that can be computed with any central catadioptric camera, including classical perspective cameras. Using this new set, a classical image-based control law has been proved to be globally stable with respect to modeling errors. In practice, this means that the exact radius of the target sphere is not needed: a rough estimate is sufficient. Compared with the set previously proposed for this target in [1], the new set yields a better (shorter) system trajectory.
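Features defined on the unit sphere can be computed from any central catadioptric camera once image points are lifted back onto that sphere. The sketch below assumes the unified central projection model of Geyer and Daniilidis and of Barreto, which the page itself does not name. In that model, a point is first projected onto the unit sphere and then perspectively projected from a centre shifted by the mirror parameter ξ. The function names are hypothetical.

```python
import numpy as np


def project_unified(X, xi):
    """Unified central projection: 3D points -> unit sphere -> normalized image.

    X: (N, 3) points in the camera frame; xi: mirror parameter in [0, 1]
    (xi = 0 is a pinhole camera, xi = 1 a parabolic mirror).
    Assumes Xs_z + xi > 0 for every point.
    """
    X = np.asarray(X, dtype=float)
    Xs = X / np.linalg.norm(X, axis=1, keepdims=True)   # spherical projection
    return Xs[:, :2] / (Xs[:, 2:3] + xi)


def lift_unified(m, xi):
    """Inverse map: normalized image points -> points on the unit sphere."""
    m = np.asarray(m, dtype=float)
    r2 = np.sum(m**2, axis=1, keepdims=True)
    # Positive root of eta^2 (r2 + 1) - 2 xi eta + xi^2 - 1 = 0.
    eta = (xi + np.sqrt(1.0 + (1.0 - xi**2) * r2)) / (r2 + 1.0)
    return np.hstack([eta * m, eta - xi])


# Hypothetical points in front of the camera (metres).
pts = np.array([[0.1, -0.2, 1.0], [0.5, 0.3, 0.4], [-0.3, 0.1, 2.0]])
on_sphere = lift_unified(project_unified(pts, 0.8), 0.8)
```

With ξ = 0 the lifting reduces to normalizing (x, y, 1), which is the ordinary perspective case. The same sphere-based feature computation can therefore serve both camera types.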


Irisa - Inria - Copyright 2009 © Lagadic Project