In 2010, André Seznec was awarded an ERC advanced grant, for the DAL proposal.

This project spanned from 04-2011 to 03-2016 and is now over.

Highlighting a few achievements

Multicore processors have now become mainstream for both general-purpose and embedded computing. Instead of working on improving the architecture of the next generation multicore, with the DAL project, we deliberately anticipate the next few generations of multicores.

While multicores featuring 1000’s of cores might become feasible around 2020, there are strong indications that sequential programming style will continue to be dominant. Even future mainstream parallel applications will exhibit large sequential sections. Amdahl’s law indicates that high performance on these sequential sections is needed to enable overall high performance on the whole application. On many (most) applications, the effective performance of future computer systems using a 1000-core processor chip will significantly depend on their performance on both sequential code sections and single thread.

We envision that, around 2020, the processor chips will feature a few complex cores and many (may be 1000’s) simpler, more silicon and power effective cores.

In the DAL research project, we will explore the microarchitecture techniques that will be needed to enable high performance on such heterogeneous processor chips. Very high performance will be required on both sequential sections -legacy sequential codes, sequential sections of parallel applications- and critical threads on parallel applications -e.g. the main thread controlling the application. Our research will focus on enhancing single process performance. On the microarchitecture side, we will explore both a radically new approach, the sequential accelerator, and more conventional processor architectures. We will also study how to exploit heterogeneous multicore architectures to enhance sequential thread performance.

- André Seznec, principal investigator,

- Pierre Michaud, researcher,

- Erven Rohou, researcher,

- Bharat N. Swany, PhD student, joined Sep 2011, Exploiting heterogeneous manycores on sequential code, graduated March 2015

- Surya Natarajan, PhD student, joined january 2012, What makes parallel code sections and sequential code sections different? graduated June 2015

- Ricardo Velasquez, PhD student, (graduated April 2013)

- Nathanael Premillieu, PhD student (graduated Dec. 2013)

- Arthur Pérais, PhD student, joined Oct 2012, Revisiting superscalar architecture with quasi-unlimited transistor budget, graduated Sept. 2015

- Arjun Suresh, PhD student, joined Nov 2012, Improving single core execution in the many-core era, graduated May 2016

- Sajith Kalathingal, PhD student, joined December 2012, Augmenting superscalar architecture for efficient many-thread parallel execution, to be defended fall 2016

- Andrea Mondelli PhD student, joined October 2013 Sequential Accelerators in Future Manycore Processors, to be defended fall 2016

- Aswinkumar Sridahran, PhD student, joined October 2013, Adaptatative Intelligent Memory Systems, to be defended fall 2016

Highlighting a few achievements

1 Microarchitecture: speculative execution

The ALF team has continued its long trend of research on branch prediction and related topics. On branch prediction per se, at CBP4 (4th Championship Branch Prediction) in 2014, we formalized how to complement a TAGE predictor (introduced in 2006) with a neural branch predictor component. The TAGE-SC predictor [43] won the contest in both large and small limited storage budget contests and the multi-PO-TAGE predictors set limits in the unlimited storage budget contest [19]. More recently, we showed that the TAGE-SC framework allows to capture other forms of branch outcome correlation that the local or global branch history correlation, namely the inner most loop iteration (or IMLI) correlation [38,12].

[38,12] was done in collaboration with Joshua San Miguel and Jorge Albericio from University of Toronto.

Branch Prediction and Performance of Interpreter

Folklore attributes a significant performance penalty to mispredictions on indirect branches in interpreters. In [35], we revisit this assumption, considering state-of-the-art branch predictors and the three most recent Intel processor generations on current interpreters. Using both hardware counters on Haswell, the latest Intel processor generation, and simulation of the ITTAGE predictor, we show that the accuracy of indirect branch prediction is no longer critical for interpreters.

Out-of-order execution of guarded ISA

ARM ISA based processor manufacturers are struggling to implement medium-end to high-end processor cores.Unfortunately providing efficient out-of-order execution on legacy ARM V7 codes may be quite challenging due to guarded instructions.

Predicting the guarded instructions addresses the main serialization impact associated with guarded instructions execution and the multiple definition problem. We introduce a hybrid branch and guard predictor, combining a global branch history component and global branch-and-guard history component. The potential gain or loss due to the systematic use of guard prediction is dynamically evaluated at run-time. This allows to use a relatively inefficient but simple hardware solution on the few unpredicted guarded instructions. Significant performance benefits are observed on most applications while applications with poorly predictable guards do not suffer from performance loss [10, 4].

Value prediction (VP) was proposed in the mid 90’s to enhance the performance of high-end microprocessors. The research on VP techniques almost vanished in the early 2000’s as it was more effective to increase the number of cores than to dedicate some silicon area to VP. However the need for sequential performance is still vivid.

At a first step, we showed that all predictors are amenable to very high accuracy at the cost of some loss on prediction coverage [32]. This greatly diminishes the number of value mispredictions and allows to delay validation until commit-time. This allows to introduce a new microarchitecture, EOLE [7, 31, 8]. EOLE features Early Execution to execute simple instructions whose operands are ready (predicted) in parallel with Rename and Late Execution to execute simple predicted instructions and high confidence branches just before Commit. All these instructions are not sent to out-of-order execution core. EOLE allows to reduce the out-of-order issue-width by 33% while still benefitting from Value Prediction. Furthermore, in-order Late Execution and Early Execution allow optimization of the register file such as banking that further reduce the number of required ports.

We further establish that the Value Predictor per itself can be implemented on the same principles and a front-end instruction fetch mechanisms. In [33], we show that a block-based value prediction scheme mimicking current instruction fetch mechanisms, BeBoP, can efficiently predict values for a fetch block rather than for distinct instructions. Furthermore we introduce the Differential VTAGE predictor, an efficient value predictor with a storage budget in the same storage range (32K-64KB) as a branch predictor.

2 Microarchitecture: pipeline organization

Cost effective scheduling in high performance processors

To maximize performance, out-of-order execution processors sometimes issue instructions without having the guarantee that operands will be available in time; e.g. loads are typically assumed to hit in the L1 cache and dependent instructions are issued assuming a L1 hit. This form of speculation known as speculative scheduling becomes more and more critical as the delay between the Issue and the Execute stages increases. Unfortunately, the scheduler may wrongly issue an instruction that will not have its source(s) on the bypass network when it reaches the Execute stage. Therefore, this instruction must be canceled and replayed, which can potentially impair performance and increase energy consumption.

In [30] we propose simple and efficient mechanisms to reduce the number of replays that are agnostic of the replay scheme associated with the L1 cache hit/miss prediction and the L1 bank conflicts.

This study was done in collaboration with Erik Hagersten’ group from Uppsala University. Erik was visiting DAL for 4 months (09-2014,12-2014).

Criticality-aware Resource Allocation in OOO Processors

Modern processors employ large structures (IQ, LSQ, register file, etc.) to expose instruction-level parallelism (ILP) and memory-level parallelism (MLP). These resources are typically allocated to instructions in program order. In [37], we explore the possibility of allocating pipeline resources only when needed to expose MLP, and thereby enabling a processor design with significantly smaller structures, without sacrificing performance.We identify the classes of instructions that should not reserve resources in program order.We then use this information to ”park” such instructions in a simpler, and therefore more efficient, Long Term Parking (LTP) structure. The LTP stores instructions until they are ready to execute, without allocating pipeline resources, and thereby keeps the pipeline available for instructions that can generate further MLP. LTP uses a simple queue instead of a complex IQ. A simple queue-based LTP design significantly reduces IQ (64 → 32) and register file (128 → 96) sizes while retaining MLP performance and improving energy efficiency.

This study was done in collaboration with Erik Hagersten’ group from Uppsala University. Erik was visiting DAL for 4 months (09-2014,12-2014).

In the last 10 years, the clock frequency of high-end superscalar processors did not increase significantly. Performance keeps being increased mainly by integrating more cores on the same chip and by introducing new instruction set extensions. However, this benefits only to some applications and requires rewriting and/or recompiling these applications. A more general way to increase performance is to increase the IPC, the number of instructions executed per cycle.

In [6], we show some of the benefits of technology scaling should be used to increase the IPC of future superscalar cores. Starting from microarchitecture parameters similar to recent commercial high-end cores, we show that an effective way to increase the IPC is to increase the issue width. But this must be done without impacting the clock cycle. We propose to combine two known techniques: clustering and register write specialization. We show that, on a wide-issue dual cluster, a very simple steering policy that sends 64 consecutive instructions to the same cluster, the next 64 instructions to the other cluster, and so on, permits tolerating an inter-cluster delay of several cycles.

3 Hardware/software approaches

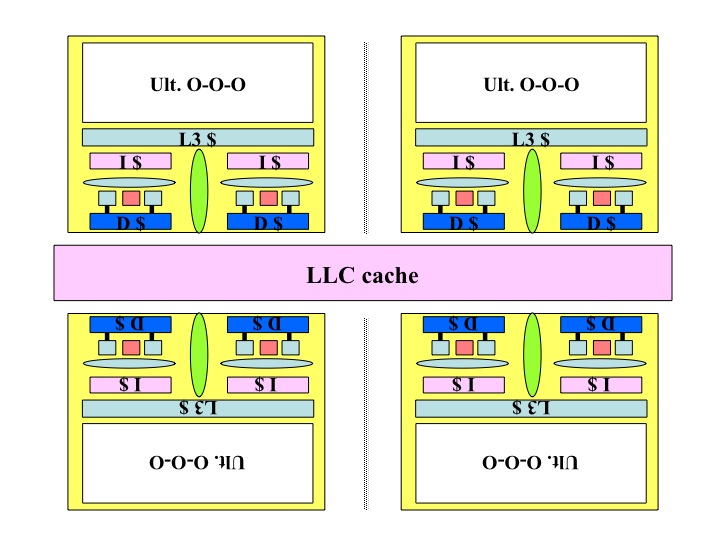

Heterogeneous Many Cores (HMC) architectures that mix many simple/small cores with a few complex/large cores are emerging as a design alternative that can provide both fast sequential performance for single threaded workloads and power-efficient execution for throughput oriented parallel workloads. In [23, 1] we present a hardware/software framework called core-tethering to support efficient helper threading on heterogeneous many-cores. Core-tethering provides a co-processor like interface to the small cores that (a) enables a large core to directly initiate and control helper execution on a small helper core and (b) allows efficient transfer of execution context between the cores, thereby reducing the performance overhead of accessing small cores for helper execution. Despite the latency overheads of accessing prefetched cache lines from the shared L3 cache, helper thread based prefetching on small cores improves single thread performance on memory intensive workloads in HMC architectures.

Augmenting superscalar architecture for efficient many-thread parallel execution

Threads of Single-Program Multiple-Data (SPMD) applications often exhibit very similar control flows, i.e. they execute the same instructions on different data. In [17] we propose the Dynamic Inter-Thread Vectorization Architecture (DITVA) to leverage this implicit Data Level Parallelism on SPMD applications to create dynamic vector instructions at runtime.

DITVA extends an in-order SMT processor with SIMD units with an inter-thread vectorization execution mode. In this mode, identical instructions of several threads running in lockstep are aggregated into a single SIMD instruction. DITVA leverages existing SIMD units and maintains binary compatibility with existing CPU architectures. To balance TLP and DLP, threads are statically grouped into fixed-size warps, inside which threads run in lockstep. At instruction fetch time, if the instruction streams of several threads within a warp are synchronized, then DITVA aggregates the instructions of the threads as dynamic vectors.

DITVA runs unmodified CPU binaries. A dynamic vector instruction is executed as a single unit. This allows to execute m identical instructions from m different threads on m parallel execution lanes while activating the I-fetch, the decode, and the overall pipeline control only once. Our evaluation shows that the DITVA architecture is very energy efficient on most SPMD applications.

4 Mastering the memory hierarchy

Hardware prefetching is an important feature of modern high-performance processors. When an application’s working set is too large to fit in on-chip caches, performance depends heavily on the efficiency of the hardware prefetchers.We propose a new hardware data prefetcher, the Best-Offset (BO) prefetcher. The BO prefetcher is an offset prefetcher using a new method for selecting the best prefetch offset taking into account prefetch timeliness. The hardware required for implementing the BO prefetcher is very simple. The BO prefetcher won the last Data Prefetching Championship [21] and was presented at HPCA 2016 [22].

Ideally, a compressed cache increases effective capacity by tightly compacting compressed blocks, has low tag and metadata overheads, and allows fast lookups. Previous compressed cache designs, however, fail to achieve all these goals. In [36], we propose the Skewed Compressed Cache (SCC), a new hardware compressed cache that lowers overheads and increases performance. SCC tracks super- blocks to reduce tag overhead, compacts blocks into a variable number of sub-blocks to reduce internal fragmentation, but retains a direct tag-data mapping to find blocks quickly and eliminate extra metadata (i.e., no backward pointers). SCC does this using novel sparse super-block tags and a skewed associative mapping that takes compressed size into account. In our experiments, SCC provides on average 8% (up to 22%) higher performance, and on average 6% (up to 20%) lower total energy, at a very limited hardware overhead.

The research on Skewed Compressed Caches [36] was done in collaboration with Somayeh Sardashti and David Wood from University of Wisconsin.

Adaptive Intelligent Memory Systems

Multi-core processors employ shared Last Level Caches (LLC). This trend will continue in the future with large multi-core processors (16 cores and beyond) as well. Consequently, with large multicore processors, the number of cores that share the LLC becomes larger than the associativity of the cache itself. LLC management policies have been extensively studied for small scale multi-cores (4 to 8 cores) and associativity degree in the 16 range. In [45], we introduce Adaptive Discrete and deprioritized Application PrioriTization (ADAPT), an efficient LLC management policy addressing the large multi-cores where the LLC associativity degree is smaller than the number of cores.

4 Microarchitecture Performance Modeling

Selecting benchmark combinations for the evaluation of multicore throughput In [47], we have shown that fast approximate microarchitecture models such as BADCO [15, 5] can be useful for selecting multiprogrammed workloads for evaluating the throughput of multicore processors. Computer architects usually study multiprogrammed workloads by considering a set of benchmarks and some combinations of these benchmarks. However, there is no standard method for selecting such sample, and different authors have used different methods. The choice of a particular sample impacts the conclusions of a study. Using BADCO, we propose and compare different sampling methods for defining multiprogrammed workloads for computer architecture. We show that random sampling, the simplest method, is robust to define a representative sample of workloads, provided the sample is big enough. We propose a method for estimating the required sample size based on fast approximate simulation.

Modeling multi-threaded programs execution time in the many-core era

Estimating the potential performance of parallel applications on the yet-to-be-designed future many cores is very speculative. The simple models proposed by Amdahl’s law (fixed input problem size) or Gustafson’s law (fixed number of cores) do not capture the scaling behaviour of a multi-threaded (MT) application leading to over estimation of performance in the many-core era. In [25, 2], we propose a more refined but still tractable, high level empirical performance model for multi-threaded applications, the Serial/Parallel Scaling (SPS) Model to study the scalability and performance of application in many-core era. SPS model learns the application behavior on a given architecture and provides estimates of the performance in future many-cores. Considering both input problem size and the number of cores in modeling, SPS model can help in making high level decisions on the design choice of future many-core applications and architecture. SPS was validated on the Many-Integrated Cores (MIC) Xeon-Phi with 240 logical cores.

5 Improving sequential performance through memoization

Many applications perform repetitive computations, even when properly programmed and optimized. Performance can be improved by caching results of pure functions, and retrieving them instead of recomputing a result (a technique called memoization). We propose [13] a simple technique for enabling software memoization of any dynamically linked pure function and we illustrate our framework using a set of computationally expensive pure functions – the transcendental functions. Our technique does not need the availability of source code and thus can be applied even to commercial applications as well as applications with legacy codes. As far as users are concerned, enabling memoization is as simple as setting an environment variable. Substantial performance benefit can be obtained on applications making extensive use of pure functions.

DAL related publications

Doctoral Dissertations and Habilitation Theses

[1] B. Narasimha Swamy, Exploiting heterogeneous manycores on sequential code, Theses, UNIVERSITE DE RENNES 1, March 2015, [hal:tel-01126807].

[2] S. N. Natarajan, Modeling performance of serial and parallel sections of multi-threaded programs in manycore era, Theses, INRIA Rennes – Bretagne Atlantique and University of Rennes 1, France, June 2015, [hal:tel-01170039].

[3] A. Perais, Increasing the Performance of Superscalar Processors through Value Prediction, Theses, Rennes 1, September 2015, [hal:tel-01235370].

[4] N. Prémillieu, Increase Sequential Performance in the Manycore Era, Theses, Université Rennes 1, December 2013, [hal:tel-00916589].

[5] R. A. Velásquez Vélez, Behavioral Application-dependent Superscalar Core Modeling, Theses, Université Rennes 1, April 2013, [hal:tel-00908544].

Articles in International Journals

[6] P. Michaud, A. Mondelli, A. Seznec, Revisiting Clustered Microarchitecture for Future Superscalar Cores: A Case for Wide Issue Clusters, ACM Transactions on Architecture and Code Optimization (TACO) 13, 3, August 2015, p. 22, [doi:10.1145/2800787], [hal:hal-01193178].

[7] A. Perais, A. Seznec, EOLE: Toward a Practical Implementation of Value Prediction, IEEE Micro 35, 3, June 2015, p. 114 – 124, [doi:10.1109/MM.2015.45], [hal: hal-01193287].

[8] A. Perais, A. Seznec, EOLE: Combining Static and Dynamic Scheduling through Value Prediction to Reduce Complexity and Increase Performance, TOCS – ACM Transactions on Computer Systems, February 2016, p. 34, [hal:hal-01259139].

[9] N. Prémillieu, A. Seznec, SYRANT: SYmmetric Resource Allocation on Not-taken and Taken Paths, ACM Transactions on Architecture and Code Optimization – HIPEAC Papers 8, 4, January 2012, p. Article No.: 43, [doi:10.1145/2086696.2086722], [hal: inria-00539647].

[10] N. Prémillieu, A. Seznec, Efficient Out-of-Order Execution of Guarded ISAs, ACM Transactions on Architecture and Code Optimization (TACO) , December 2014, p. 21, [doi:10.1145/2677037], [hal:hal-01103230].

[11] S. Sardashti, A. Seznec, D. A. Wood, Yet Another Compressed Cache: a Low Cost Yet Effective Compressed Cache, ACM Transactions on Architecture and Code Optimization, September 2016, p. 25, [hal:hal-01354248].

[12] A. Seznec, J. San Miguel, J. Albericio, Practical Multidimensional Branch Prediction, IEEE Micro, 2016, [doi:10.1109/MM.2016.33], [hal:hal-01330510].

[13] A. Suresh, B. Narasimha Swamy, E. Rohou, A. Seznec, Intercepting Functions for Memoization: A Case Study Using Transcendental Functions, ACM Transactions on Architecture and Code Optimization (TACO) 12, 2, July 2015, p. 23, [doi:10.1145/ 2751559], [hal:hal-01178085].

[14] H. Vandierendonck, A. Seznec, Fairness Metrics for Multithreaded Processors, IEEE Computer Architecture Letters, January 2011, [doi:10.1109/L-CA.2011.1], [hal: inria-00564560].

[15] R. A. Velasquez, P. Michaud, A. Seznec, BADCO: Behavioral Application-Dependent Superscalar Core Models, International Journal of Parallel Programming, October 2013, [doi:10.1007/s10766-013-0278-1], [hal:hal-00907659].

International Conference/Proceedings

[16] S. Eyerman, P. Michaud, W. Rogiest, Revisiting Symbiotic Job Scheduling, in: IEEE International Symposium on Performance Analysis of Systems and Software, Philadelphia, United States, March 2015, [doi:10.1109/ISPASS.2015.7095791], [hal: hal-01139807].

[17] S. Kalathingal, S. Collange, B. Swamy, A. Seznec, Dynamic Inter-Thread Vectorization Architecture: extracting DLP from TLP, in: Proceedings of the International Symposium on Computer Architecture and High Performance Computing (SBAC-PAD), Oct 2016.

[18] K. Kuroyanagi, A. Seznec, Service Value Aware Memory Scheduler by Estimating Request Weight and Using per-Thread Traffic Lights, in: 3rd JILP Workshop on Computer Architecture Competitions (JWAC-3): Memory Scheduling Championship (MSC), Rajeev Balasubramonian (Univ. of Utah), Niladrish Chatterjee (Univ. of Utah), Zeshan Chishti (Intel), Portland, United States, June 2012, [hal:hal-00746951].

[19] P. Michaud, A. Seznec, Pushing the branch predictability limits with the multi-poTAGE+SC predictor, in: 4th JILP Workshop on Computer Architecture Competitions (JWAC-4): Championship Branch Prediction (CBP-4), Minneapolis, United States, June 2014, [hal:hal-01087719].

[20] P. Michaud, Five poTAGEs and a COLT for an unrealistic predictor, in: 4th JILP Workshop on Computer Architecture Competitions (JWAC-4): Championship Branch Prediction (CBP-4), Minneapolis, United States, June 2014, [hal:hal-01087692].

[21] P. Michaud, A Best-Offset Prefetcher, in: 2nd Data Prefetching Championship, Portland, United States, June 2015, [hal:hal-01165600].

[22] P. Michaud, Best-Offset Hardware Prefetching, in: International Symposium on High-Performance Computer Architecture, Barcelona, Spain, March 2016, [hal: hal-01254863].

[23] B. Narasimha Swamy, A. Ketterlin, A. Seznec, Hardware/Software Helper Thread Prefetching On Heterogeneous Many Cores, in: 2014 IEEE 26th International Symposium on Computer Architecture and High Performance Computing (SBAC-PAD), Paris, France, October 2014, [doi:10.1109/SBAC-PAD.2014.39], [hal:hal-01087752].

[24] S. Narayanan, B. Narasimha Swamy, A. Seznec, Impact of serial scaling of multi-threaded programs in many-core era, in: WAMCA – 5th Workshop on Applications for Multi-Core Architectures, Paris, France, October 2014, [doi:10.1109/SBAC-PADW.2014.9], [hal:hal-01089446].

[25] S. N. Natarajan, B. Narasimha Swamy, A. Seznec, An Empirical High Level Performance Model For FutureMany-cores, in: Proceedings of the 12th ACM International Conference on Computing Frontiers, Ischia, Italy, 2015, [doi:10.1145/2742854.2742867], [hal:hal-01170038].

[26] S. N. Natarajan, A. Seznec, Sequential and Parallel Code Sections are Different: they may require different Processors, in: PARMA-DITAM ’15 – 6th Workshop on Parallel Programming and Run-Time Management Techniques for Many-core Architectures, 6th Workshop on Parallel Programming and Run-Time Management Techniques for Many-core Architectures and 4th Workshop on Design Tools and Architectures for Multicore Embedded Computing Platforms, ACM, p. 13–18, Amsterdam, Netherlands, January 2015, [doi: 10.1145/2701310.2701314], [hal:hal-01170061].

[27] B. Panda, A. Seznec, Dictionary Sharing: An Efficient Cache Compression Scheme for Compressed Caches, in: 49th Annual IEEE/ACM International Symposium on Microarchitecture, 2016, IEEE/ACM, Taipei, Taiwan, October 2016, [hal:hal-01354246].

[28] M.-M. Papadopoulou, X. Tong, A. Seznec, A. Moshovos, Prediction-based superpage-friendly TLB designs, in: 21st IEEE symposium on High Performance Computer Architecture, San Francisco, United States, 2015, [doi:10.1109/HPCA.2015.7056034], [hal:hal-01193176].

[29] A. Perais, F. Endo, A. Seznec, Register Sharing for Equality Prediction, in: 49th Annual IEEE/ACM International Symposium on Microarchitecture, 2016, IEEE/ACM, Taipei, Taiwan, October 2016, [hal:hal-01354246].

[30] A. Perais, A. Seznec, P. Michaud, A. Sembrant, E. Hagersten, Cost-Effective Speculative Scheduling in High Performance Processors, in: International Symposium on Computer Architecture, Proceedings of the International Symposium on Computer Architecture, 42, ACM/IEEE, p. 247–259, Portland, United States, June 2015, [doi: 10.1145/2749469.2749470], [hal:hal-01193233].

[31] A. Perais, A. Seznec, EOLE: Paving the Way for an Effective Implementation of Value Prediction, in: International Symposium on Computer Architecture, 42, ACM/IEEE, p. 481 – 492, Minneapolis, MN, United States, June 2014, [doi:10.1109/ISCA.2014.6853205], [hal:hal-01088130].

[32] A. Perais, A. Seznec, Practical data value speculation for future high-end processors, in: International Symposium on High Performance Computer Architecture, IEEE, p. 428 – 439, Orlando, FL, United States, February 2014, [doi:10.1109/HPCA.2014.6835952], [hal:hal-01088116].

[33] A. Perais, A. Seznec, BeBoP: A Cost Effective Predictor Infrastructure for Superscalar Value Prediction, in: International Symposium on High Performance Computer Architecture, 21, IEEE, p. 13 – 25 ), San Francisco, United States, February 2015, [doi: 10.1109/HPCA.2015.7056018], [hal:hal-01193175].

[34] A. Perais, A. Seznec, Cost Effective Physical Register Sharing, in: International Symposium on High Performance Computer Architecture, 22, IEEE, Barcelona, Spain, March 2016, [hal:hal-01259137].

[35] E. Rohou, B. Narasimha Swamy, A. Seznec, Branch Prediction and the Performance of Interpreters – Don’t Trust Folklore, in: International Symposium on Code Generation and Optimization, Burlingame, United States, February 2015, [hal: hal-01100647].

[36] S. Sardashti, A. Seznec, D. A. Wood, Skewed Compressed Cache, in: MICRO – 47th Annual IEEE/ACM International Symposium on Microarchitecture, Cambridge, United Kingdom, December 2014, [hal:hal-01088050].

[37] A. Sembrant, T. Carlson, E. Hagersten, D. Black-Shaffer, A. Perais, A. Seznec, P. Michaud, Long Term Parking (LTP): Criticality-aware Resource Allocation in OOO Processors, in: International Symposium on Microarchitecture, Micro 2015, Proceeding of the International Symposium on Microarchitecture, Micro 2015, ACM, p. 11, Honolulu, United States, December 2015, [hal:hal-01225019].

[38] A. Seznec, J. San Miguel, J. Albericio, The Inner Most Loop Iteration counter: a new dimension in branch history , in: 48th International Symposium On Microarchitecture, ACM, p. 11, Honolulu, United States, December 2015, [hal:hal-01208347].

[39] A. Seznec, A 64 Kbytes ISL-TAGE branch predictor, in: JWAC-2: Championship Branch Prediction, JILP, San Jose, United States, June 2011, [hal:hal-00639040].

[40] A. Seznec, A 64-Kbytes ITTAGE indirect branch predictor, in: JWAC-2: Championship Branch Prediction, JILP, San Jose, United States, June 2011, [hal:hal-00639041].

[41] A. Seznec, A New Case for the TAGE Branch Predictor, in: MICRO 2011 : The 44th Annual IEEE/ACM International Symposium on Microarchitecture, 2011, ACM (editor), ACM-IEEE, Porto Allegre, Brazil, December 2011, [hal:hal-00639193].

[42] A. Seznec, A New Case for the TAGE Branch Predictor, in: Proceedings of the 44th Annual IEEE/ACM International Symposium on Microarchitecture, MICRO-44, ACM, p. 117–127, New York, NY, USA, 2011, [doi:10.1145/2155620.2155635].

[43] A. Seznec, TAGE-SC-L Branch Predictors, in: JILP – Championship Branch Prediction, Minneapolis, United States, June 2014, [hal:hal-01086920].

[44] A. Seznec, Bank-interleaved cache or memory indexing does not require euclidean division, in: 11th Annual Workshop on Duplicating, Deconstructing and Debunking, Portland, United States, June 2015, [hal:hal-01208356].

[45] A. Sridharan, A. Seznec, Discrete Cache Insertion Policies for Shared Last Level Cache Management on Large Multicores, in: 30th IEEE International Parallel & Distributed Processing Symposium, Chicago, United States, May 2016, [hal:hal-01259626].

[46] R. A. Velasquez, P. Michaud, A. Seznec, BADCO: Behavioral Application-Dependent Superscalar Core Model, in: SAMOS XII: International Conference on Embedded Computer Systems: Architectures, Modeling and Simulation, Samos, Greece, July 2012, [hal:hal-00707346].

[47] R. A. Velasquez, P. Michaud, A. Seznec, Selecting Benchmark Combinations for the Evaluation of Multicore Throughput, in: International Symposium on Performance Analysis of Systems and Software, Austin, United States, April 2013, [doi:10.1109/ISPASS. 2013.6557168], [hal:hal-00788824].